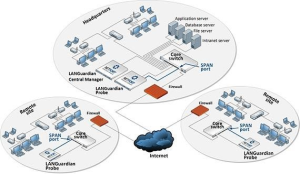

During a customer review call last week, we got a very interesting quote from a US-based user who offers marketing services to the retail sector: ‘We need greater insight over what is taking place on our internal network, systems, services, and external web farm seen through a single portal. We need to keep downtime to a minimum both internally and on our external customer-facing web farm. We chose LANGuardian because of its integration with SolarWinds and its deep-packet inspection capabilities.’

Before discussing this in more detail, a word on cloud: with all the hype these days, we now always ask about it. When we asked this contact about hosting these critical services in the cloud, he countered with three reasons for keeping them in house:

- Security

- Control

- Cost

When pressed on ‘cost’, he explained that they ship huge amounts of data. If they hosted and stored it in the cloud, the bandwidth and storage charges would be huge and would not make economic sense.

Back to deeper traffic analysis. It turns out this customer had already purchased and installed a NetFlow-based product to get more visibility into his critical server farm, his external, public-facing environment. His business requires him to be proactive, to keep downtime to a minimum and keep his customers happy. But, as they also mentioned to us: ‘With NetFlow we almost get to the answer, and then sometimes we have to break out another tool like Wireshark or something. Now with NetFort DPI (Deep Packet Inspection) we get the detail NetFlow does NOT provide, true endpoint visibility.’

What detail? What detail did this team use to justify the purchase of another monitoring product to management? I bet it was not as simple as ‘I need more detail and visibility into traffic, please sign this’! We know that with tools like Wireshark one can get down to a very low level of detail, down to the ‘bits and bytes’. But sometimes that is too low: far too much detail, overly complex for some people, and it becomes very difficult to see the ‘wood for the trees’ and get the big picture.

One critical detail we at NetFort sometimes take for granted is the level of insight our DPI gives into web or external traffic. It does not matter if the traffic goes via a CDN, a proxy or whatever; with deep packet inspection one can look deeper to get the detail required. Users can capture and keep every domain name, URI and IP address and, critically, the amount of data transferred, which ties each IP address and URI to bandwidth. As a result, this particular customer is now able to monitor usage of every single resource or service they offer: who is accessing that URI, service or piece of data, when, how often, how much bandwidth the customer accessing that resource is consuming, and so on.

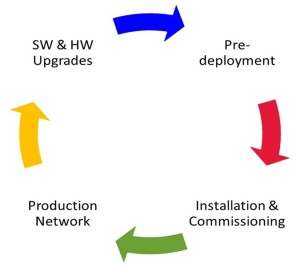
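To make the idea of tying an IP address and URI to bandwidth concrete, here is a minimal sketch in Python. It is not how LANGuardian works internally. The `HttpRecord` type, its field names and the sample values are all hypothetical, standing in for whatever per-request metadata a DPI tool exports.

```python
from collections import defaultdict
from typing import NamedTuple


class HttpRecord(NamedTuple):
    """Hypothetical per-request metadata as a DPI tool might export it."""
    client_ip: str
    host: str
    uri: str
    bytes_transferred: int


def bandwidth_by_resource(records):
    """Sum bytes transferred for each (host, uri) pair."""
    totals = defaultdict(int)
    for r in records:
        totals[(r.host, r.uri)] += r.bytes_transferred
    return dict(totals)


def top_clients_for(records, host, uri, n=3):
    """Return up to n clients of one resource, heaviest first."""
    per_client = defaultdict(int)
    for r in records:
        if r.host == host and r.uri == uri:
            per_client[r.client_ip] += r.bytes_transferred
    return sorted(per_client.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Made-up sample data using documentation-only addresses and names.
SAMPLE = [
    HttpRecord("192.0.2.10", "shop.example.com", "/catalog.pdf", 5_000_000),
    HttpRecord("192.0.2.11", "shop.example.com", "/catalog.pdf", 2_000_000),
    HttpRecord("192.0.2.10", "shop.example.com", "/catalog.pdf", 1_000_000),
    HttpRecord("192.0.2.12", "shop.example.com", "/index.html", 40_000),
]

if __name__ == "__main__":
    print(bandwidth_by_resource(SAMPLE))
    print(top_clients_for(SAMPLE, "shop.example.com", "/catalog.pdf"))
```

The point of the sketch is the grouping key. A flow record can only be grouped by address and port, while a record that carries the host and URI can be grouped by the actual resource being served.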

Users can also trend this information to help detect unusual activity or help with capacity planning. This customer also mentioned that with deeper traffic analysis they were able to take a group of servers each week and really analyze usage: find the busiest server, the least busy, the top users, who was using up their bandwidth and what they were accessing. In short, they get to the right level of detail, the evidence required to make informed decisions and plan.
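The weekly review described above can be sketched in a few lines of Python. This is only an illustration under our own assumptions: the function names, the server names and the "more than twice the average of earlier weeks" rule are hypothetical, not something the customer or LANGuardian prescribes.

```python
from statistics import mean


def busiest_and_quietest(totals):
    """Given {server_name: bytes}, return (busiest, least busy)."""
    busiest = max(totals, key=totals.get)
    quietest = min(totals, key=totals.get)
    return busiest, quietest


def unusual_weeks(weekly_bytes, factor=2.0):
    """Return indexes of weeks whose traffic exceeds `factor` times
    the average of all earlier weeks (an assumed, very simple rule)."""
    flagged = []
    for i in range(1, len(weekly_bytes)):
        baseline = mean(weekly_bytes[:i])
        if weekly_bytes[i] > factor * baseline:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Made-up weekly totals for three hypothetical servers.
    print(busiest_and_quietest({"web1": 500, "web2": 120, "web3": 900}))
    # Made-up weekly traffic for one server; the fourth week spikes.
    print(unusual_weeks([100, 110, 90, 400, 105]))
```

A fixed multiple of the running average is about the crudest trend check there is. It is used here only because it is easy to read, and a real deployment would likely want something that copes with seasonality.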

CDN (Content Delivery Network) usage has increased dramatically recently and is making life very difficult for network administrators trying to keep tabs on bandwidth usage and generate meaningful reports. We had a customer recently who powered up a bunch of servers and saw a huge peak in bandwidth consumption. With NetFlow, the domain reported was an obscure CDN and meant nothing. The LANGuardian reported huge downloads of data from windowsupdate.com from a particular IP address and also reported the user name.
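Why does looking inside the packet help here? A flow record only carries addresses, ports and counters, so a download served by a CDN shows up under the CDN's address. A plain HTTP request, on the other hand, names the site the client actually asked for in its `Host` header. The sketch below pulls that header out of a raw request. The function name and the sample request are hypothetical, and it only handles unencrypted HTTP; for HTTPS a tool would have to rely on something else, such as the server name sent in the TLS handshake.

```python
from typing import Optional


def host_from_http_request(payload: bytes) -> Optional[str]:
    """Return the Host header value from a raw HTTP/1.x request, if any."""
    # Headers end at the first blank line; ignore any body after it.
    head = payload.split(b"\r\n\r\n", 1)[0]
    # Skip the request line, then scan the header lines.
    for line in head.split(b"\r\n")[1:]:
        name, sep, value = line.partition(b":")
        if sep and name.strip().lower() == b"host":
            return value.strip().decode("ascii", errors="replace")
    return None


if __name__ == "__main__":
    # Made-up request; the path is illustrative only.
    request = (
        b"GET /example/update.cab HTTP/1.1\r\n"
        b"Host: windowsupdate.com\r\n"
        b"User-Agent: example-agent\r\n"
        b"\r\n"
    )
    print(host_from_http_request(request))
```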

What was that about justification? How about simply greater insight to reduce downtime, maximise utilisation, increase performance and reduce costs? All this means happier customers, less stress for the network guys and more money for everybody!

Thanks to NetFort for the article.

Infosim®
